Testing

What, Why and Where?

- Does your code do what it should?

- Does it continue to do what it should after you modify it?

- Three months later, how do you remember what it was supposed to do anyway?

- Tests can help while you're writing the code.

Remember

Always code as if the guy who ends up maintaining your code will be a violent psychopath who knows where you live.

Code for readability.

I am sure the psychopath will appreciate a working test suite too.

Testing Types

- unit testing - test functionality of individual procedures

- integration testing - test how parts work together (interfaces)

- system testing - testing the whole system at a high level (black box, functional)

pytest

- Pytest can run unittest, doctest and nose style test suites

- no-boilerplate

- quick to get started, powerful to do more complex things

- plugins

- I started with nose and switched to pytest

Basic Test

In its simplest form, a pytest test is a function whose name starts with test_. It is found automatically if the filename matches test_*.py or *_test.py (details later).

    %%writefile test_my_add.py

    def my_add(a, b):
        return a + b

    def test_my_add():
        assert my_add(2, 3) == 5

Overwriting test_my_add.py

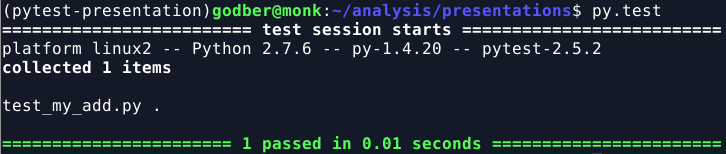

Running the basic test

Run the following command in the directory with the file:

py.test

The output would look like this:

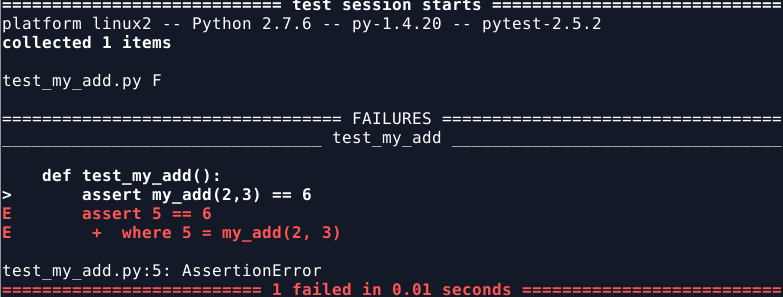

Failed Test Output

Pytest Test Discovery

How does pytest find its tests?

- Collection starts from the initial command line arguments (directories, filenames or test ids)

- Recurse into directories, unless they match norecursedirs

- test_*.py or *_test.py files, imported by their package name.

- Classes prefixed with Test (without an __init__ method)

- Functions or methods prefixed with test_
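As a rough illustration of the naming rules above, the hypothetical file below marks which definitions pytest would be expected to collect under its default settings. The file name, class names and function names are invented for this sketch.

```python
# test_discovery_demo.py: hypothetical file name, matches test_*.py

def helper_double(x):
    # Not collected: the name does not start with "test_".
    return 2 * x


def test_double():
    # Collected: a module-level function prefixed with "test_".
    assert helper_double(2) == 4


class TestDouble:
    # Collected: the class name starts with "Test" and defines no __init__.
    def test_zero(self):
        assert helper_double(0) == 0

    def check_negative(self):
        # Not collected: the method name lacks the "test_" prefix.
        assert helper_double(-1) == -2


class DoubleTests:
    # Not collected by default: the class name does not start with "Test".
    def test_one(self):
        assert helper_double(1) == 2
```

Running py.test --collect-only in the same directory should list only test_double and TestDouble.test_zero.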

Explaining the Basic Example

- Basic example file was test_my_add.py

- Executed by running: py.test

- Had it been named my_add.py, it would not match the discovery patterns

- Execute it by naming the file explicitly: py.test my_add.py

Pytest and Doctest

- By default, pytest only runs doctests in text files matching test*.txt

- Add more with --doctest-glob='*.rst'

- Run doctests in module docstrings with: py.test --doctest-modules

Two tests: a doctest and assertion based unit test.

    %%writefile test_my_add2.py

    def my_add(a, b):
        """ Sample doctest
        >>> my_add(3,4)
        7
        """
        return a + b

    def test_my_add():
        assert my_add(2, 3) == 5

Overwriting test_my_add2.py

!py.test test_my_add2.py

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 1 items

    test_my_add2.py .

    =========================== 1 passed in 0.01 seconds ===========================

only one test ran!?!?

!py.test test_my_add2.py --doctest-modules

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 2 items

    test_my_add2.py ..

    =========================== 2 passed in 0.04 seconds ===========================

Much better: both tests run with the --doctest-modules argument.

Pytest and Python unittest

    %%writefile test_my_add3.py

    import unittest

    def my_add(a, b):
        return a + b

    class TestMyAdd(unittest.TestCase):
        def test_add1(self):
            # assertEqual compares the values; "assert x, 7" would only check truthiness
            self.assertEqual(my_add(3, 4), 7)

Writing test_my_add3.py

!py.test test_my_add3.py

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 1 items

    test_my_add3.py .

    =========================== 1 passed in 0.03 seconds ===========================

runs as you would expect, without modification.

Reflection

Just to be clear ...

- use of doctest and unittest is optional

- legacy perhaps

- tests can be implemented as shown in the base example

- mix and match even
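To make "mix and match" concrete, here is a small sketch of one file that holds all three styles side by side. The file name and the test names are made up for illustration; my_add is the same function used in the earlier examples.

```python
# test_mixed_styles.py: hypothetical file combining the three styles
import unittest


def my_add(a, b):
    """Add two values.

    >>> my_add(3, 4)
    7
    """
    return a + b


class TestMyAddUnittest(unittest.TestCase):
    # unittest style: methods on a TestCase subclass
    def test_add(self):
        self.assertEqual(my_add(3, 4), 7)


def test_my_add_plain():
    # pytest style: a bare function with a plain assert
    assert my_add(2, 3) == 5
```

Running py.test --doctest-modules on this file would be expected to collect all three tests; without the flag, only the unittest method and the plain function are collected.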

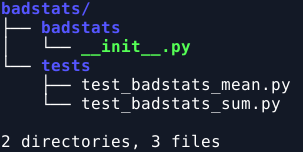

Split your tests out into a separate file

Into a test/ subdirectory even

Like so:

Pytest Assertions

- Python assert statement

- Expecting exceptions: pytest.raises()

- Expecting failure (coming up)
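A minimal sketch of the first two items, reusing my_add from the earlier examples. The test names are invented here, and the TypeError comes from Python itself when an int and a str are added.

```python
import pytest


def my_add(a, b):
    return a + b


def test_my_add_plain_assert():
    # A plain assert is enough for value checks.
    assert my_add(2, 3) == 5


def test_my_add_rejects_mixed_types():
    # The with-block passes only if the expected exception is raised inside it.
    with pytest.raises(TypeError):
        my_add(2, "3")
```

If the call inside the with-block raised nothing, pytest.raises would fail the test.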

New Example

The following badstats module will be used in upcoming examples:

    %load badstats/badstats/__init__.py

    def _sum(data):
        total = 0
        for d in data:
            total += d
        return total

    def mean(data):
        n = len(data)
        return _sum(data) / n

    %load badstats/tests/test_badstats_sum_a.py

    from badstats import _sum

    def test_sum_simple():
        data = (1, 2, 3, 4)
        assert _sum(data) == 10

    def test_sum_fails():
        data = (1.2, -1.0)
        assert _sum(data) == 0.2

!py.test badstats/tests/test_badstats_sum_a.py

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 2 items

    badstats/tests/test_badstats_sum_a.py .F

    =================================== FAILURES ===================================
    ________________________________ test_sum_fails ________________________________

        def test_sum_fails():
            data = (1.2, -1.0)
    >       assert _sum(data) == 0.2
    E       assert 0.19999999999999996 == 0.2
    E        +  where 0.19999999999999996 = _sum((1.2, -1.0))

    badstats/tests/test_badstats_sum_a.py:9: AssertionError
    ====================== 1 failed, 1 passed in 0.01 seconds ======================

Floating Point is Hard :-/

- Fix it later

- keep the test as a reminder

- but mark it as expected to fail with xfail.

    %load badstats/tests/test_badstats_sum_b.py

    import pytest
    from badstats import _sum

    def test_sum_simple():
        data = (1, 2, 3, 4)
        assert _sum(data) == 10

    @pytest.mark.xfail
    def test_sum_fails():
        data = (1.2, -1.0)
        assert _sum(data) == 0.2

!py.test badstats/tests/test_badstats_sum_b.py

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 2 items

    badstats/tests/test_badstats_sum_b.py .x

    ===================== 1 passed, 1 xfailed in 0.01 seconds ======================

Pytest Xfail, Conditions and More

- Modifying test execution using the pytest module

- Use of the decorator @pytest.mark.xfail.

- Skip tests with conditionals and @pytest.mark.skipif

- Custom Markers: @pytest.mark.NAME, for example @pytest.mark.webtest, selected with py.test -v -m webtest

- See mark documentation
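A short sketch of the skipif and custom-marker items. The test names, the skip condition and the test bodies are placeholders chosen for illustration; only the decorators themselves come from the list above.

```python
import sys

import pytest


@pytest.mark.skipif(sys.platform == "win32", reason="does not run on windows")
def test_posix_only():
    # Skipped entirely (not run) when the condition is true.
    assert "/".join(["a", "b"]) == "a/b"


@pytest.mark.webtest
def test_fetch_placeholder():
    # "webtest" is a custom marker; select it with: py.test -v -m webtest
    # The body is a stand-in; a real test would talk to a web service.
    assert True
```

Recent pytest releases warn about custom markers that are not registered under the markers option of the configuration file, so a real project would probably list webtest there.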

Extended Xfail Example

- Variable Use

- Xfail conditional (and reason)

    %load badstats/tests/test_badstats_sum_c.py

    import pytest
    import sys
    from badstats import _sum

    # Alias the marker so the decorator below stays short
    xfail = pytest.mark.xfail

    def test_sum_simple():
        data = (1, 2, 3, 4)
        assert _sum(data) == 10

    # sys.platform is 'linux2' on Python 2 and 'linux' on Python 3.3+
    @xfail(sys.platform.startswith('linux'), reason='requires windows')
    def test_sum_fails():
        data = (1.2, -1.0)
        assert _sum(data) == 0.2

!py.test badstats/tests/test_badstats_sum_c.py

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 2 items

    badstats/tests/test_badstats_sum_c.py .x

    ===================== 1 passed, 1 xfailed in 0.01 seconds ======================

Parametrize

When you want to test a number of inputs on the same function, use @pytest.mark.parametrize.

    %load badstats/tests/test_badstats_sum_param.py

    import pytest
    from badstats import _sum

    @pytest.mark.parametrize("input,expected", [
        ((1, 2, 3, 4), 10),
        ((0, 0, 1, 5), 6),
        # pytest 2.x form; newer releases use pytest.param(..., marks=pytest.mark.xfail)
        pytest.mark.xfail(((1.2, -1.0), 0.2)),
    ])
    def test_sum(input, expected):
        assert _sum(input) == expected

!py.test badstats/tests/test_badstats_sum_param.py

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 3 items

    badstats/tests/test_badstats_sum_param.py ..x

    ===================== 2 passed, 1 xfailed in 0.01 seconds ======================

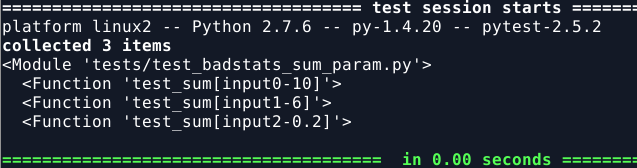

Note that three tests were executed, one per parameter set.

You can inspect which tests are 'found' by running py.test --collect-only.

Pytest Fixtures

"The purpose of test fixtures is to provide a fixed baseline upon which tests can reliably and repeatedly execute. pytest fixtures offer dramatic improvements over the classic xUnit style of setup/teardown functions."

However, classic xUnit-style setup/teardown functions are still available.

    %load badstats/tests/test_badstats_fixture.py

    import pytest
    import badstats

    @pytest.fixture
    def data():
        return (1.0, 2.0, 3.0, 4.0)

    def test_sum_simple(data):
        assert badstats._sum(data) == 10.0

    def test_mean_simple(data):
        assert badstats.mean(data) == 2.5

!py.test badstats/tests/test_badstats_fixture.py

    ============================= test session starts ==============================
    platform linux2 -- Python 2.7.6 -- py-1.4.20 -- pytest-2.5.2
    collected 2 items

    badstats/tests/test_badstats_fixture.py ..

    =========================== 2 passed in 0.01 seconds ===========================
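For comparison, the same two checks in the classic xUnit style mentioned above might look roughly like this. setup_method and teardown_method are hooks that pytest calls around each test method of a class. The class name and the choice to store the data on self are assumptions of this sketch, and _sum and mean are copied from badstats so it runs on its own.

```python
def _sum(data):
    total = 0
    for d in data:
        total += d
    return total


def mean(data):
    return _sum(data) / len(data)


class TestBadstatsXunit:
    def setup_method(self, method):
        # Runs before every test method: rebuild the baseline data.
        self.data = (1.0, 2.0, 3.0, 4.0)

    def teardown_method(self, method):
        # Runs after every test method: drop the data again.
        del self.data

    def test_sum_simple(self):
        assert _sum(self.data) == 10.0

    def test_mean_simple(self):
        assert mean(self.data) == 2.5
```

In the fixture version each test names the data it needs as an argument, so no shared state has to live on self.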

Running Tests on Module Code

Environment setup can differ somewhat, but if you wanted to run the code shown above you have two choices:

- Install badstats module with pip install -e .

- Run pytest in badstats top directory with PYTHONPATH set: PYTHONPATH=. py.test

Other Things

- Stand Alone Self Encapsulated Tests: py.test --genscript=runtests.py

- Stop output capture (see print() statements): py.test -s
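By default pytest captures anything a test writes to stdout and reports it only for failing tests. A tiny sketch of a test whose print() output becomes visible with -s (the test name and the message are invented here):

```python
def test_with_debug_output():
    total = sum((1, 2, 3, 4))
    # Captured by default; shown live only when running: py.test -s
    print("intermediate total:", total)
    assert total == 10
```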

Advanced Topics for Lightning Talks

- Grouping Tests in Classes

- xUnit Style Setup/Teardown

- Understanding Fixture Details: (scope, finalize, parameterization)

- Integration with other tools: tox, setuptools

- Advanced Reporting

- Handling Command Line Options

- Plugins and configuration using conftest.py